Accelerate AI and Graphics Performance

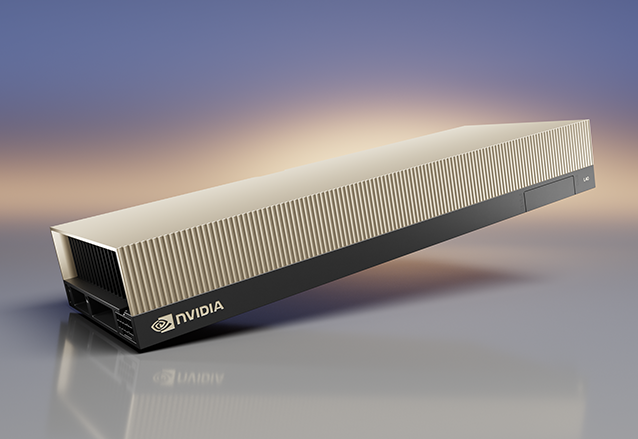

ASUS is a select NVIDIA OVX server system provider and an experienced, trusted AI-solutions provider, with the knowledge and capabilities to bridge technology gaps and deliver optimized solutions to customers.
